Adventures in Sampling Points on Triangles (Part 1.5): Reader Mail and Followups

It’s a mixed bag, not having comments on the blog here. I figure that I save a bunch of time and annoyance by not having to filter spam, but on the other hand, allowing comments would allow many-to-many discussion of posts, rather than the many-to-one and then one-to-many that we get from people emailing me and then my writing another post that summarizes those discussions. As far as I can tell, there aren’t any particularly good options for allowing comments (especially given that I want to host this thing myself and am partial to a static website) and so here we are, back in one-to-many land.

Last time I said that we’d talk about sampling spherical triangles next, but I got pulled into a few interesting email threads after the first post, so instead we’ll discuss a few of those here.

Improving Performance (Andrew Willmott)

Andrew Willmott came up with an optimized implementation of Basu and Owen’s algorithm; it takes advantage of the redundancy in the three barycentric coordinates (i.e., that in barycentric space, they’re the vertices of an equilateral triangle with side length that depends on which digit is currently being processed.) Given some additional attention, that insight leads to a branchless implementation that gives exactly the same results as my earlier one.

std::array<Float, 3> LowDiscrepancySampleTriangle(Float u) {

uint32_t uf = u * (1ull << 32); // Fixed point

Float cx = 0.0f, cy = 0.0f;

Float w = 0.5f;

for (int i = 0; i < 16; i++) {

uint32_t uu = uf >> 30;

bool flip = (uu & 3) == 0;

cy += ((uu & 1) == 0) * w;

cx += ((uu & 2) == 0) * w;

w *= flip ? -0.5f : 0.5f;

uf <<= 2;

}

Float b0 = cx + w / 3.0f, b1 = cy + w / 3.0f;

return { b0, b1, 1 - b0 - b1 };

}

Andrew also notes that it’s possible to do even better: the step of

reversing the bits of the sample index and dividing by 232 to

get a van der Corput point to pass in to LowDiscrepancySampleTriangle()

can be skipped, and then in turn, the loop can be modified to process the

digits in “backwards” order.

His improved version gives a 2.34x speedup on the CPU on my system here. I’d expect the benefit to be even greater on a GPU, thanks to the implementation maintaining fully coherent execution.

An Improved 2D Warp (Eric Heitz and Justin Talbot)

Apparently sampling triangles is in the air; Eric Heitz recently posted a tweet with a link to a write-up describing a new warping from barycentric coordinates to points on triangles. The basic idea is to compress diagonals of the square sampling domain that are perpendicular to triangle’s diagonal until they end at the triangle’s diagonal. As a bonus, that mapping is more efficient than the traditional mapping, since no square root is necessary.

The implementation is straightforward; for the record, here’s my version of his algorithm:

std::array<Float, 3> UniformSampleTriangle(const Point2f &u) {

Float b0 = u[0] / 2, b1 = u[1] / 2;

Float offset = b1 - b0;

if (offset > 0) b1 += offset;

else b0 -= offset;

return { b0, b1, 1 - b0 - b1 };

}

As I was reading Eric’s write-up, something tickled in my brain and I dug through old email; I found a message from 2011 from Justin Talbot, describing the same approach and asking if I knew if it was new. It was one of those emails where I thought to myself, “huh, that’s interesting—I should think about this carefully before I reply.” To my shame, I never did take the time to think it through, and never replied. (Once again, my haphazard email habits do their part to impede progress.)

Eric’s write-up has a number of nice visualizations of sample points and warped grids and seems to make a pretty clear case that the characteristics of the distortion with the new mapping are better than the traditional one (where we saw last time how things get extra stretched in one of the triangle corners.)

Since Eric showed triangles and points on them, I figured that I’d measure rendering error, with the same test scene as last time, still at 16 samples per pixel. The results were… interesting.

| Warping | Sampler | MSE Error | Error : Basu-Owen |

|---|---|---|---|

| sqrt | pmj02bn | 2.450e-5 | 2.15x |

| sqrt | stratified | 3.230e-5 | 2.83x |

| Heitz-Talbot | pmj02bn | 2.456e-5 | 2.15x |

| Heitz-Talbot | stratified | 2.254e-5 | 1.98x |

The winner is… The Heitz-Talbot warping with stratified sampling? I can’t see any reason why stratified sampling would offer an improvement over pmj02bn points, since those are also 4x4 stratified (and then more). Yet, that’s what the numbers say—stratified seems to be the best, at least in this case. I suspect that this result either points to a bug in my pmj02bn sample generation code (which seems unlikely–I do actually, like, verify that the sample points are stratified all the ways they’re supposed to be), something subtle and interesting going on with the Heitz-Talbot mapping, or some other gremlin.

One other thing to note is that with pmj02bn points, both mappings have essentially the same error. I suspect that this is an indication of the fact that pmj02bn points satisfy all of the elementary intervals, which in turn makes them resilient to stretching from mappings like these. (So maybe my implementation of them isn’t buggy after all.)

All of that doesn’t matter too much in this case, though, since Basu-Owen still wins handily (with all the caveats still of “in this one test scene with a big area light”.)

Randomization and Structure (Per Christensen)

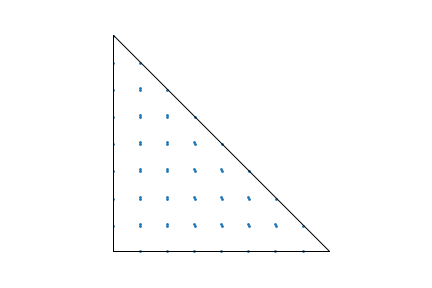

Per Christensen sent along a number of interesting observations, only one of which we’ll get to here today. He noted that using Cranley-Patterson rotation to randomize the 1D sample points before applying Basu and Owen’s mapping might not be the best idea. In particular, he pointed out that with an offset of 0.041630, you get these points:

64 sample points with Basu and Owen's mapping, randomized by applying a

Cranley-Patterson rotation of 0.041630 to the 1D van der Corput sample

points.

It feels fairly safe to say that that’s not an awesome set of sample points.

Per’s observation got me excited and I started hoping that I could see even better results by improving the randomization. First I tried simple random digit scrambling, based on Kollig and Keller’s method; it’s just a matter of computing a random 32-bit hash at each pixel and then for each sample, XORing the sample’s index with the pixel’s hash before reversing the bits and dividing by 232 to get a sample in [0,1).

Here’s the result, compared to Cranley-Patterson rotation using a blue noise texture. Like last time, the images are rendered at 16spp, using pmj02bn points, and multiple importance sampling.

(Click on the tabs to switch images, or use the number keys after selecting the image. Hit ‘f’ to go fullscreen. You can also pan and zoom using the mouse.)

You can’t see much difference; their errors are within 1%. I like the image with the blue-noise based Cranley-Patterson rotation better, thanks to blue noise, the human visual system, and all that.

I then spent some time trying to implement a full and proper Owen random digit scrambling, but was only able to make images with much higher error. I assume there is some dumb bug in my code, but will cut bait on that for now in the interests of getting this posted.

Next time, a little more reader email to get through and then, I hope, finally, spherical triangles.