On to the next thing

I’ve been a long-time skeptic about ray tracing for interactive rendering. Whenever the topic’s come up, I’ve always been on the side of “I wish, but it just doesn’t make sense for real-time rendering”. It’s certainly not that I don’t like ray tracing, but for a long time, I felt that most of the arguments that were made in favor of it never really stood up to close scrutiny.

I don’t want to go through all of them (in part because smoke will start to come out of my ears), but just to mention a few:

- Rasterization is a hack. This is the worst one. Rasterization is a wonderfully efficient algorithm for computing visibility from a single viewpoint. That’s an awfully useful thing. And by the way, for the past 30 years, it’s done that robustly, without cracks along shared triangle edges, something that has only recently been solved for ray tracing.

- Rasterization is only fast because it has dedicated hardware. The implication being that ray tracing could be just as fast, given just a few transistors. This one misses the substantial advantages of coherent and limited memory access with rasterization (both texture and framebuffer) and ignores the substantial computational advantages of computing visibility from a single viewpoint rather than from arbitrary viewpoints.

- Ray tracing has O(log n) time complexity while rasterization is O(n), so it wins for sufficiently complex scenes. Apparently, the fact that culling and LOD can be, and are, applied in rasterization renderers is not universally understood.
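To illustrate the culling point, here is a minimal Python sketch of hierarchical culling. It is a deliberately simplified stand-in: the "scene" is a sorted list of 1D intervals, the "view" is a 1D range, and the names `build` and `cull` are made up for this example. Real renderers cull 3D bounding volumes against a view frustum, but the idea of rejecting whole subtrees without touching their contents is the same.

```python
def build(objects, leaf_size=4):
    """Build a bounding hierarchy over a sorted list of (lo, hi) intervals."""
    node = {"lo": min(o[0] for o in objects), "hi": max(o[1] for o in objects)}
    if len(objects) <= leaf_size:
        node["objects"] = objects
    else:
        mid = len(objects) // 2
        node["children"] = [build(objects[:mid], leaf_size),
                            build(objects[mid:], leaf_size)]
    return node


def cull(node, view_lo, view_hi, stats):
    """Return the objects overlapping [view_lo, view_hi], counting nodes visited."""
    stats["nodes_visited"] += 1
    if node["hi"] < view_lo or node["lo"] > view_hi:
        return []  # the whole subtree is outside the view: skip it
    if "objects" in node:
        return [o for o in node["objects"]
                if o[1] >= view_lo and o[0] <= view_hi]
    visible = []
    for child in node["children"]:
        visible += cull(child, view_lo, view_hi, stats)
    return visible


if __name__ == "__main__":
    scene = [(float(i), i + 0.5) for i in range(10000)]
    stats = {"nodes_visited": 0}
    visible = cull(build(scene), 10.0, 20.0, stats)
    print(len(visible), "objects to rasterize;", stats["nodes_visited"], "nodes visited")
```

With a narrow view, only a small number of hierarchy nodes are visited, and only the surviving objects would be handed to the rasterizer, so the cost per frame is not proportional to the total object count.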

Now, ray tracing does offer something special: because it can answer arbitrary visibility queries (“is there an occluder between two arbitrary points” or “what’s the first object visible from an arbitrary point in an arbitrary direction”), it’s well suited to computing global illumination and illumination from large area light sources using Monte Carlo integration. Both of those lighting effects are hard to simulate with a rasterizer because the visibility computations required are inherently incoherent.
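To make the "is there an occluder between two arbitrary points" query concrete, here is a minimal Python sketch that uses it to estimate, by Monte Carlo sampling, how much of a square area light is visible from a shading point. Everything in it is an assumption for illustration: the scene is a single sphere occluder, and the names `segment_hits_sphere` and `light_visibility` are hypothetical. A real renderer would answer the same query against a full acceleration structure.

```python
import math
import random


def segment_hits_sphere(p0, p1, center, radius):
    """Visibility query: does the segment p0 -> p1 pass through the sphere?"""
    d = [b - a for a, b in zip(p0, p1)]      # segment direction
    m = [a - c for a, c in zip(p0, center)]  # sphere center to segment start
    a = sum(x * x for x in d)
    b = 2.0 * sum(x * y for x, y in zip(m, d))
    c = sum(x * x for x in m) - radius * radius
    disc = b * b - 4.0 * a * c
    if disc < 0.0:
        return False  # the infinite line misses the sphere
    root = math.sqrt(disc)
    t0 = (-b - root) / (2.0 * a)
    t1 = (-b + root) / (2.0 * a)
    # Occluded if the hit interval [t0, t1] overlaps the segment [0, 1].
    return t0 <= 1.0 and t1 >= 0.0


def light_visibility(point, light_center, half_size, occluder, n_samples, rng):
    """Estimate the visible fraction of a square light lying in a plane of constant y."""
    cx, cy, cz = light_center
    center, radius = occluder
    visible = 0
    for _ in range(n_samples):
        # Pick a uniformly random point on the light.
        sample = (cx + (2.0 * rng.random() - 1.0) * half_size,
                  cy,
                  cz + (2.0 * rng.random() - 1.0) * half_size)
        if not segment_hits_sphere(point, sample, center, radius):
            visible += 1
    return visible / n_samples


if __name__ == "__main__":
    rng = random.Random(0)
    v = light_visibility((0, 0, 0), (0, 10, 0), 1.0, ((0.5, 5, 0), 0.5), 256, rng)
    print("estimated visible fraction of the light:", v)
```

Each sample is a separate shadow ray toward a different point on the light, so neighboring shading points end up tracing rays in many different directions. That is the incoherence that makes this hard to do with a rasterizer.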

As desirable as they are, these effects come with two big challenges:

- The rays to be traced are incoherent. Many of the early interactive ray tracing demos mostly traced coherent rays, starting at the camera or ending at a point light source. These can be traced much more efficiently than arbitrary rays, using techniques like packet tracing, frustum tracing, and the like. Impressive early ray-tracing demos with coherent rays gave a misleading sense of what the performance would be with incoherent rays.

- To get good results for global illumination and complex lighting, you generally need to trace hundreds of rays per pixel. Even today, graphics hardware can deliver maybe a handful of rays traced per pixel, nowhere near as many as are needed for a noise-free result.
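The gap between "a handful" and "hundreds" of rays follows from how Monte Carlo noise behaves: the standard deviation of an N-sample estimate falls only as 1/sqrt(N). The Python sketch below measures this for a toy pixel whose true value is a visibility fraction. The setup is assumed purely for illustration (each ray is modeled as a coin flip that is unoccluded with probability `true_visibility`), and the function names are hypothetical.

```python
import random
import statistics


def estimate_pixel(n_rays, true_visibility, rng):
    """Average n_rays binary visibility samples into one pixel estimate."""
    hits = sum(1 for _ in range(n_rays) if rng.random() < true_visibility)
    return hits / n_rays


def noise_level(n_rays, true_visibility=0.5, trials=2000, seed=1):
    """Standard deviation of the pixel estimate across many independent trials."""
    rng = random.Random(seed)
    estimates = [estimate_pixel(n_rays, true_visibility, rng)
                 for _ in range(trials)]
    return statistics.pstdev(estimates)


if __name__ == "__main__":
    for n in (4, 64, 256):
        print(n, "rays per pixel -> noise", round(noise_level(n), 4))
```

For this toy pixel the theoretical noise is sqrt(0.25 / N): 0.25 at 4 rays and about 0.031 at 256 rays. Cutting the noise by a factor of 8 takes 64 times as many rays, which is why a few rays per pixel fall so far short without some other way of removing the noise.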

To me, it seemed like it would be a long time before the gap between what the hardware could deliver and what was needed for high-quality interactive ray-traced images would close.[1] Given the slowing of Moore’s law, I feared it might never close. Therefore, I always believed that it was reasonable to just use additional transistors and computational capability for programmable GPU compute rather than for ray tracing.

And that was fine; the world’s real-time graphics programmers have shown an amazing ability to make use of more computation in the rasterization context. Ray tracing doesn’t have to be used everywhere.

Things they are a-changing

At a gathering at SIGGRAPH last year, I heard Brian Karis casually say that obviously path-tracing was the future of real-time graphics. It was the first time I’d heard a legit world-class game developer say that sort of thing. I’d heard others agree “sure, we’d trace rays if it was free” when prodded by the real-time ray-tracing evangelists, but that’s a whole different thing than saying “this is the future, I want this, and it’s going to happen”. The comment stuck with me, though I imagined it was a long-term view, rather than something that would start happening soon.

But then one of the most exciting recent developments in ray-tracing has been the rapid advancement of deep convnet-based denoisers; among others, researchers at Pixar and NVIDIA have done really impressive work in this area. Out of nowhere, we now have the prospect of being able to generate high-quality images with just a handful of samples per pixel. There’s still much more work to be done, but the results so far have been stunning.

And then Marco Salvi’s fantastic SIGGRAPH talk on deep learning and the future of real time rendering really got the gears turning in my head; there’s a lot more beyond just denoising that deep learning has to offer graphics.

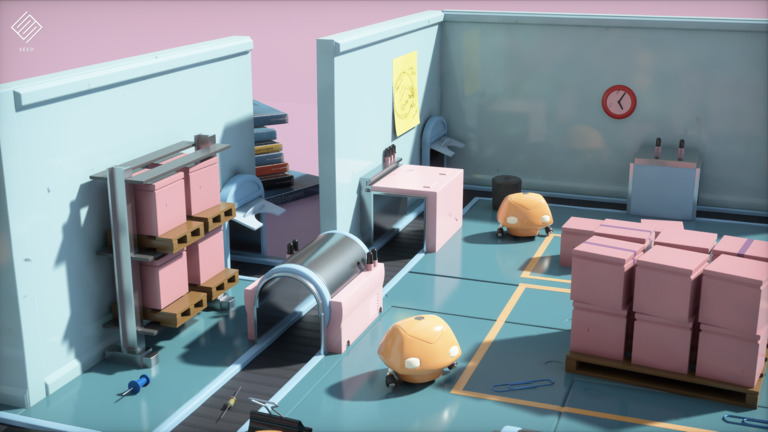

Finally, on top of all that there was the excitement of the announcement of DXR at GDC this year, accompanied by ridiculously beautiful demos from SEED and Epic. Ray tracing a first-class thing in the DX API, now something that graphics hardware vendors have reason to start working on accelerating: I had no idea any of that was in the pipeline and was floored by all of it as I saw it.

EA SEED’s gorgeous ray tracing demo.

Put it all together, and now I’m a convert: I think all of the pieces are there for real-time ray tracing to happen for real, in a way that will be truly useful for developers.

Joining NVIDIA

I believe that real-time rendering will be largely reinvented over the next few years: still with plenty of rasterization, but now with not just one, but two really exciting new tools in the toolbox—neural nets and ray tracing. I think that rapid progress will come as we revisit the algorithms used for real-time rendering and reconsider them in light of the capabilities of those new tools.

I wanted to be in the thick of all of that and to help contribute to it actually happening. Therefore, last Friday was my last day working at Google. I’m in the middle of a three-day spell of unemployment and will be starting at NVIDIA Research joining the real-time rendering group on Tuesday. I can’t wait to get started.

There are tons of interesting questions to dig into:

- How can other graphics algorithms be improved using neural nets or other techniques from machine learning?

- When rendering, when do you trace more rays and when do you use more neural nets?

- Can we sample better by having neural nets decide which rays to trace and not just reconstruct the final images?

- What’s the right balance between on-line learning based on the specific scene and training ahead of time?

- What does all this change mean for GPU architectures—how many transistors should spent on dense matrix multiply (neural net evaluation), how many specialized for ray tracing (and how), and how many on general purpose compute?

- How do you make that hardware friendly to programmers?

- What’s the right way to implement complex graphics systems that are half neural nets and half conventional graphics computation?

- How do you debug complex graphics systems that are half-learned?

Given how much progress on these fronts has already been made at NVIDIA, I think many of the solutions will be worked out there in the coming years. There’s a veritable dream team of rendering folks there—many of whom I’ve really enjoyed working with previously and many others who I’ve always wanted to have the chance to work with. Add to that hardware architects who have consistently been at the forefront of GPU architectures for nearly twenty years, and it’s hard to imagine a more exciting place to be for this chapter in computer graphics.

note

1. A discussion of interactive ray tracing would be incomplete without mentioning Caustic, which had an innovative hardware architecture for ray tracing that reordered rays to improve memory coherence. They showed impressive ray-tracing performance on mobile-class GPUs, but unfortunately the product side never worked out and the architecture never made it to the market in volume.